ChatGPT and the Analytic-Synthetic Distinction

February 8th, 2023

Although largely relegated to the dustbin of philosophy, the distinction between two kinds of propositions, analytic and synthetic, is useful once again in the context of interacting with a large language model like ChatGPT. Kantians, rejoice!

With complete disregard for the formalisms of analytic philosophy:

An analytic proposition is something like "All bachelors are unmarried." This is true by definition. We don't need to go looking for any other facts in order to verify this assertion.

In contrast, a synthetic proposition is something like "It is raining outside." Here the truthfulness of the assertion is contingent on outside facts. Literally. You have to look outside to see if it is raining or not.

Although Quine dutifully pointed out that everything is contingent on outside facts, since someone would need to look up the definitions of "bachelors" and "unmarried", there is still some use for this 18th-century metaphysical invention.

Another way to look at an analytic proposition is that verifying its truth requires only a translation from one part of a language to another. So, in light of Quine's unraveling, we can say this: there still needs to be some outside translator to query in order to verify the truth.

Translations, which can be judged as more or less correct, do not introduce new meanings or new facts. Translations can happen between languages or within a language. English can be translated into French. A baseball box score can be translated into an entertaining, paragraph-length description of the game. A spoken command can be translated into JSON data. A translation takes a source text and a translation target and generates a target text.

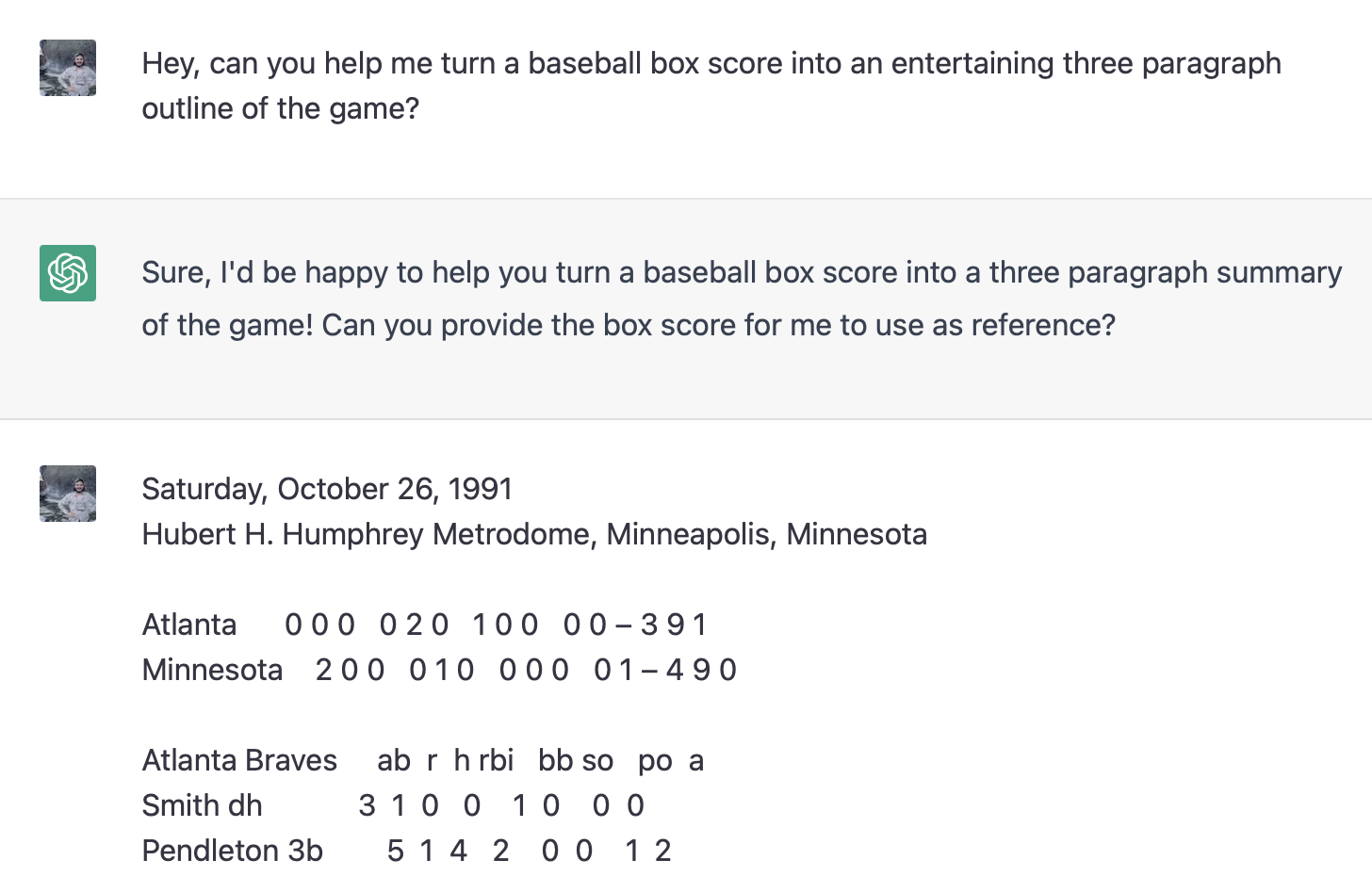

When ChatGPT is given an analytic prompt such as:

All of the necessary facts about the game are contained within the source text, the complete box score included in the analytic prompt, so no outside information is needed to translate it into the target text: an entertaining outline.

In practice, this means that ChatGPT is much more likely to produce a factually correct response to an analytic prompt. You'll notice that while the response is not the requested three paragraphs, it is a factually correct description of Game 6. The only thing missing is the rather entertaining fact about the importance of the game, which makes sense, as the box score makes no mention of the World Series.

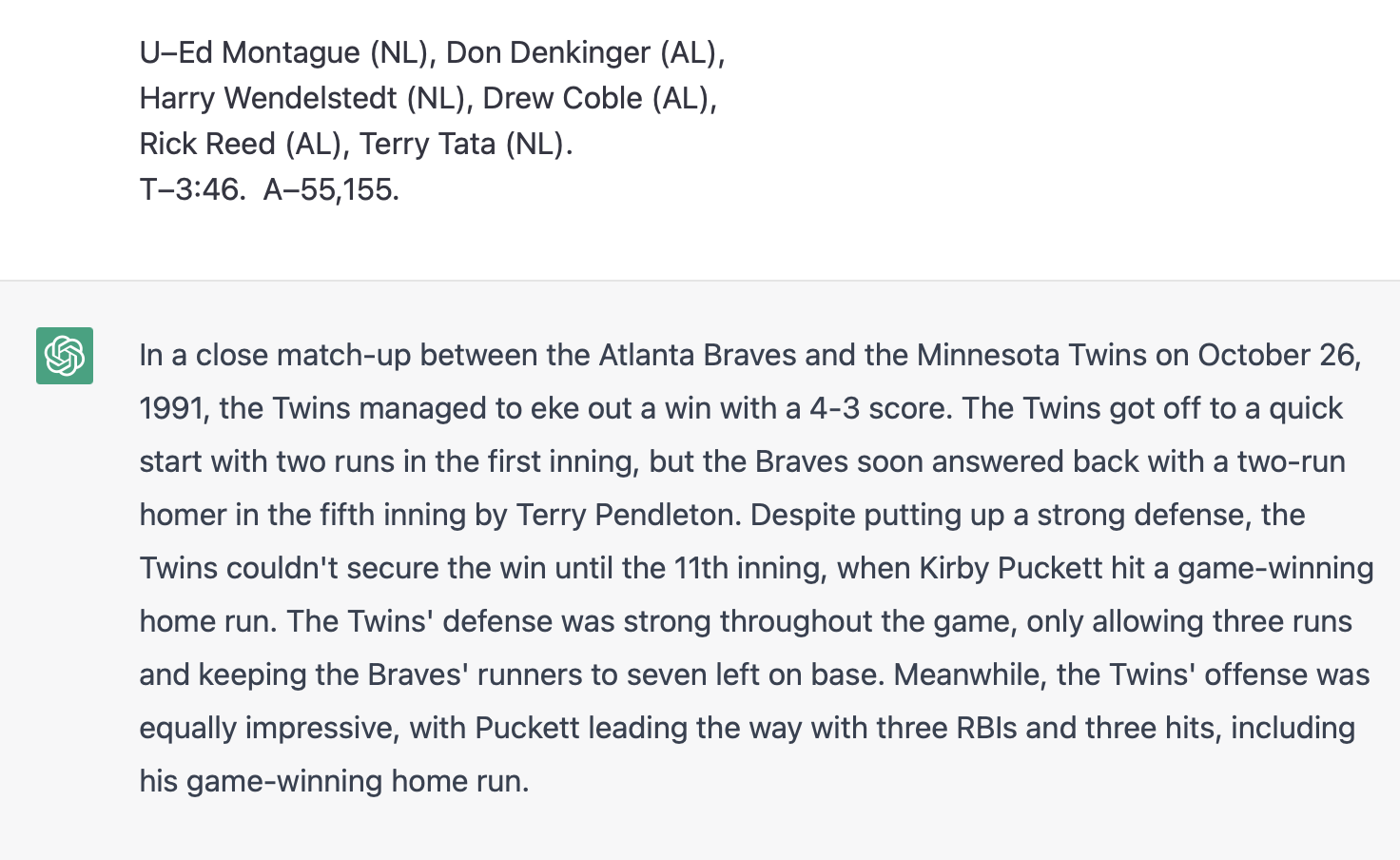

When ChatGPT is given a synthetic prompt:

None of the quotes in the response are factual, at least as far as I can tell. This is to be expected: ChatGPT will reliably produce fiction when given a synthetic prompt.

So while ChatGPT is a decent translator, it's also a decent synthesizer.

Analytic augmentation is the practice of adding facts to a given prompt. Examples range from pipelines that retrieve relevant text by nearest-neighbor search over vector embeddings to simple hard-coded templates. The more ChatGPT's role is reduced to that of a translator, the more factual the results. One could also imagine a contrasting synthetic augmentation that adds fictional information to a prompt in order to promote ChatGPT's capabilities as a synthesizer, the most fictional prompt of all being pure noise. And why not a mix of analytic and synthetic augmentation, or a step with one and then a step with the other, topped with a dash of paprika?
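To make analytic augmentation a little more concrete, here is a minimal Python sketch of the nearest-neighbor-plus-template idea. It assumes a toy bag-of-words count vector in place of a real learned embedding model, and the names `embed`, `cosine`, `retrieve`, `augment_prompt`, `TEMPLATE` and the `FACTS` list are all hypothetical, made up for illustration. It only builds the augmented prompt; sending it to ChatGPT is left out.

```python
import math
from collections import Counter

# Hypothetical toy "embedding": a bag-of-words count vector.
# A real pipeline would use a learned embedding model instead.
def embed(text: str) -> Counter:
    return Counter(w.strip(".,:;?!").lower() for w in text.split())

def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse count vectors.
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query: str, facts: list[str], k: int = 2) -> list[str]:
    # Nearest-neighbor search: rank every stored fact by similarity to the query.
    q = embed(query)
    return sorted(facts, key=lambda f: cosine(q, embed(f)), reverse=True)[:k]

# Hypothetical hard-coded template: the retrieved facts become the source text.
TEMPLATE = "Task: {task}\n\nUse only these facts:\n{facts}"

def augment_prompt(task: str, facts: list[str], k: int = 2) -> str:
    selected = retrieve(task, facts, k)
    return TEMPLATE.format(task=task, facts="\n".join(f"- {f}" for f in selected))

# Made-up facts for illustration only.
FACTS = [
    "Game 6 box score: runs, hits and errors for each team.",
    "The weather forecast calls for rain tomorrow.",
    "Bachelors are unmarried men.",
]

print(augment_prompt("Write an entertaining outline of the Game 6 box score.", FACTS))
```

Swapping the toy `embed` for a real embedding model and the list for a vector store would change the quality of the retrieval, not the shape of the idea: the retrieved facts become the source text, and the model is left with the job of translating.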

I agree with Quine that, as far as a human considering the nature of some knowledge is concerned, there really should be no distinction. Everything is contingent, especially meaning. As Wittgenstein would point out, it is only through use that we gain meaning. We had to have experienced the use of the words "bachelor" and "unmarried" in a social context in order to understand that they meant the same thing and that the statement was true. We had to have experienced the use of the words "raining" and "outside" in a social context in order to understand that we had to get up and look outside to verify that the statement was true.

Let's play a little game. Suppose that the term "machine learning" describes the process by which a large language model like ChatGPT becomes, in practice, a reliable translator. Suppose that the machine experienced all of the training data while the neural network was being trained, and that the training data consisted of the use of words in the social context of the World Wide Web. Can we say that the machine had to have experienced the use of the phrases "box score" and "entertaining outline" in a social context in order to understand that, when given a box score, it should respond with an entertaining outline?

Another game: each time we consider a true proposition, we experience its truth in one of two ways, either 1.) by translating language based on our internal model of the world, or 2.) by translating language based on our internal model of the world and then confirming it through interaction with our external model of the world. A Bayesian would then take the results of each new experience and update a set of priors. In terms of perception, this is the ebb and flow of bottom-up and top-down processing.
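For readers who want the Bayesian step spelled out, here is a minimal sketch of updating a prior with Bayes' rule, using the earlier proposition "It is raining outside." The prior (what our internal model says before we look) and the two likelihoods are invented numbers chosen only for illustration, and `update` is a hypothetical helper name.

```python
def update(prior: float, p_obs_if_true: float, p_obs_if_false: float) -> float:
    """Return P(H | E) from P(H), P(E | H) and P(E | not H) via Bayes' rule."""
    # Total probability of the observation E under both hypotheses.
    p_obs = p_obs_if_true * prior + p_obs_if_false * (1 - prior)
    return p_obs_if_true * prior / p_obs

# H: "It is raining outside." Illustrative numbers only.
belief = 0.3  # prior from our internal model, before looking
for look in range(1, 3):
    # E: looking outside and seeing rain; likely if H is true, unlikely otherwise.
    belief = update(belief, p_obs_if_true=0.9, p_obs_if_false=0.05)
    print(f"after look {look}: P(raining) = {belief:.3f}")
```

Each pass through the loop is one new experience, and each posterior becomes the prior for the next: with these made-up numbers, the belief moves from 0.3 to about 0.885 after the first look and to about 0.993 after the second.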